Months after TikTok was hauled into its first-ever major congressional hearing over platform safety, the company is today announcing a series of policy updates and plans for new features and technologies aimed at making the video-based social network a safer and more secure environment, particularly for younger users. The changes attempt to address some of the concerns U.S. senators raised during their inquiries into TikTok’s business practices, including the prevalence of eating disorder content and dangerous hoaxes on the app, both of which are particularly harmful to teens and young adults. In addition, TikTok is laying out a roadmap for addressing other serious issues, including hateful ideologies targeting the LGBTQ community and minor safety, the latter of which will involve having creators designate the age-appropriateness of their content.

TikTok also said it’s expanding its policy to protect the “security, integrity, availability, and reliability” of its platform. This change follows recent news that the Biden administration is weighing new rules for Chinese apps to protect U.S. user data from being exploited by foreign adversaries. The company said it will open cyber incident monitoring and investigative response centers in Washington, D.C., Dublin and Singapore this year, as part of this expanded effort to better prohibit unauthorized access to TikTok content, accounts, systems and data.

Another one of the bigger changes ahead for TikTok is a new approach to age-appropriate design — a topic already front of mind for regulators.

In the U.K., digital services aimed at children now have to abide by legislative standards that address children’s privacy, tracking, parental controls, the use of manipulative “dark patterns” and more. In the U.S., meanwhile, legislators are working to update the existing children’s privacy law (COPPA) to add more protection for teens. TikTok already has different product experiences for users of different ages, but it now wants to also identify which content is appropriate for younger and older teens versus adults.

Image Credits: TikTok’s age-appropriate design

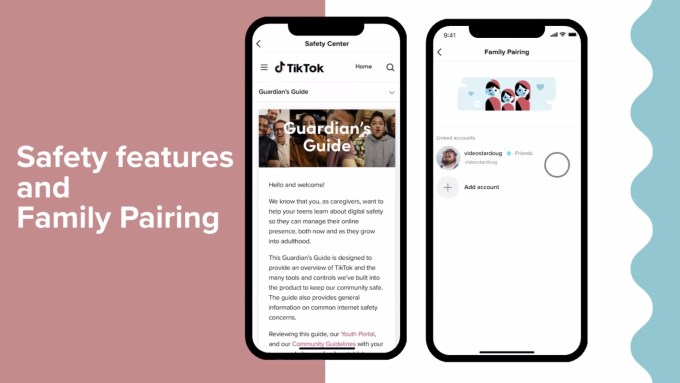

TikTok says it’s developing a system to identify and restrict certain types of content from being accessed by teens. Though the company isn’t yet sharing specific details about the new system, it will involve three parts. First, community members will be able to choose which “comfort zones,” or levels of content maturity, they want to see in the app. Second, parents and guardians will be able to use TikTok’s existing Family Pairing parental control feature to make these choices on behalf of their minor children. Finally, TikTok will ask creators to specify when their content is more appropriate for an adult audience.
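TikTok hasn’t said how these three inputs will interact, so the following is only a hypothetical sketch of one way a back end could combine them. Every name here (`Maturity`, `Viewer`, `effective_zone`, `can_view`), the three maturity tiers and the under-18 ceiling are assumptions for illustration, not TikTok’s design. The idea is that the strictest of the viewer’s own comfort zone, any guardian cap set through Family Pairing, and an age-based ceiling decides which creator-designated content is eligible.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Maturity(IntEnum):
    """Hypothetical content-maturity tiers; TikTok has not published its levels."""
    GENERAL = 0
    TEEN = 1
    ADULT = 2


@dataclass
class Video:
    video_id: str
    # Part 3 (assumed shape): the creator's own designation of the intended audience.
    creator_maturity: Maturity


@dataclass
class Viewer:
    age: int
    # Part 1 (assumed shape): the "comfort zone" the viewer picked for themselves.
    chosen_zone: Maturity
    # Part 2 (assumed shape): an optional cap set by a paired parent or guardian.
    guardian_cap: Optional[Maturity] = None


def effective_zone(viewer: Viewer) -> Maturity:
    """Return the strictest of the viewer's choice, the guardian cap and an age ceiling."""
    zone = viewer.chosen_zone
    if viewer.guardian_cap is not None:
        zone = min(zone, viewer.guardian_cap)
    # Assumption: viewers under 18 can never opt into adult-designated content.
    if viewer.age < 18:
        zone = min(zone, Maturity.TEEN)
    return zone


def can_view(viewer: Viewer, video: Video) -> bool:
    """A video is eligible only if its creator-set tier fits the viewer's effective zone."""
    return video.creator_maturity <= effective_zone(viewer)


if __name__ == "__main__":
    teen = Viewer(age=15, chosen_zone=Maturity.ADULT, guardian_cap=Maturity.GENERAL)
    adult = Viewer(age=30, chosen_zone=Maturity.ADULT)
    clip = Video("workplace-tips", Maturity.ADULT)
    print(effective_zone(teen).name, can_view(teen, clip), can_view(adult, clip))
```

In this sketch, a 15-year-old who picks the most permissive zone still can’t see adult-designated videos, and a guardian cap can narrow things further. Taking the minimum of the three limits means no single setting can loosen another.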

Image Credits: TikTok’s Family Pairing feature

“We’ve heard directly from our creators that they sometimes have a desire to only reach a specific older audience. So, as an example, maybe they’re creating a comedy that has adult humor, or offering kind of boring workplace tips that are relevant only to adults. Or maybe they’re talking about very difficult life experiences,” explained Tracy Elizabeth, TikTok’s U.S. head of Issue Policy, who oversees minor safety for the platform, in a briefing with reporters. “So given those varieties of topics, we’re testing ways to help better empower creators to reach the intended audience for their specific content,” she noted.

Elizabeth joined TikTok in early 2020 to focus on minor safety and was promoted into her new position in November 2021, which now sees her overseeing the Trust & Safety Issue Policy teams, including Minor Safety, Integrity & Authenticity, Harassment & Bullying, Content Classification and Applied Research teams. Before TikTok, she spent over three and a half years at Netflix, where she helped the company establish its global maturity ratings department. That work will inform her efforts at TikTok.

But, Elizabeth notes, TikTok won’t go as far as having “displayable” ratings or labels on TikTok videos, which would allow people to see the age-appropriate nature of a given piece of content at a glance. Instead, TikTok will rely on categorization on the back end, which will lean on having creators tag their own content in some way. (YouTube takes a similar approach, as it asks creators to designate whether their content is either adult or “made for kids,” for example.)

TikTok says it’s running a small test in this area now, but has nothing yet to share publicly.

“We’re not in the place yet where we’re going to introduce the product with all the bells and whistles. But we will experiment with a very small subset of user experiences to see how this is working in practice, and then we will make adjustments,” Elizabeth noted.

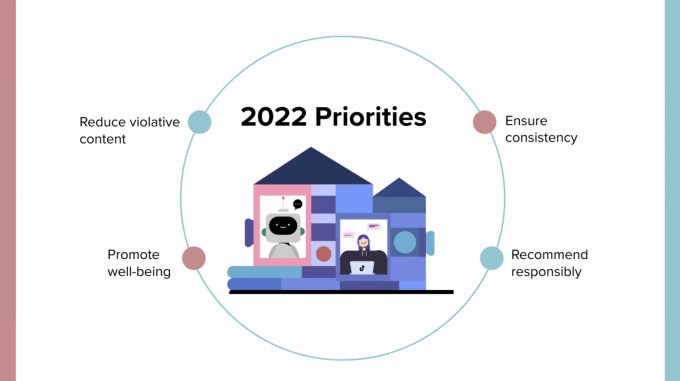

Image Credits: TikTok

TikTok’s updated content policies

In addition to its plans for a content maturity system, TikTok also announced today it’s revising its content policies in three key areas: hateful ideologies, dangerous acts and challenges, and eating disorder content.

While the company had policies addressing each of these subjects already, it’s now clarifying and refining these policies and, in some cases, moving them to their own category within its Community Guidelines in order to provide more detail and specifics as to how they’ll be enforced.

In terms of hateful ideologies, TikTok is adding clarity around prohibited topics. The policy will now specify that practices like deadnaming and misgendering, as well as misogyny and content supporting or promoting conversion therapy programs, will not be permitted. The company says these subjects were already prohibited, but it heard from creators and civil society organizations that its written policies should be more explicit. GLAAD, which worked with TikTok on the policy, shared a statement from its CEO Sarah Kate Ellis in support of the changes, noting that this “raises the standard for LGBTQ safety online” and “sends a message that other platforms which claim to prioritize LGBTQ safety should follow suit with substantive actions like these.”

Another policy being expanded focuses on dangerous acts and challenges. This is an area the company recently addressed with an update to its Safety Center and other resources in the wake of upsetting, dangerous and even fatal viral trends, including “slap a teacher,” the blackout challenge and another that encouraged students to destroy school property. TikTok denied hosting some of this content on its platform, saying, for example, that it found no evidence of any asphyxiation challenges on its app, and claiming “slap a teacher” was not a TikTok trend. However, TikTok still took action to add more information about challenges and hoaxes to its Safety Center and added new warnings when such content was searched for on the app, as advised by safety experts and researchers.

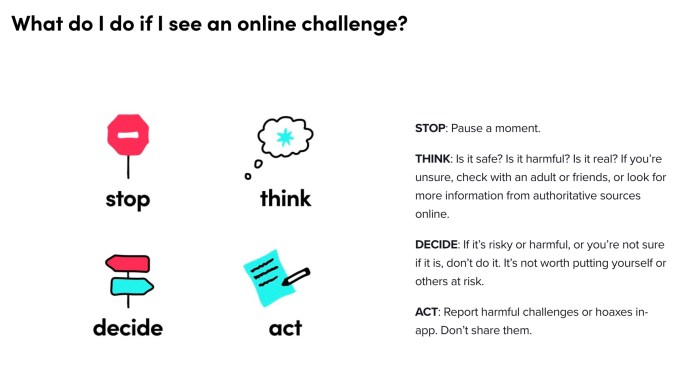

Today, TikTok says dangerous acts and challenges will also be broken out into their own policy, and it will launch a series of creator videos as part of a broader PSA-style campaign aimed at helping TikTok’s younger users better assess online content. These videos will relay the message that users should “Stop, Think, Decide, and Act” when they come across online challenges: take a moment to pause, consider whether the challenge is real (or check with an adult, if unsure), decide whether it’s risky or harmful, then act by reporting the challenge in the app and choosing not to share it.

Image Credits: TikTok

On the topic of eating disorder content (a major focus of the congressional hearing not only for TikTok, but also for other social networks like Instagram, YouTube and Snapchat), TikTok is taking more concrete steps. The company says it already removes “eating disorder” content, like content that glorifies bulimia or anorexia, but it will now broaden its policy to restrict the promotion of “disordered eating” content. This term aims to encompass other early-stage signs that can later lead to an eating disorder diagnosis, like extreme calorie counting, short-term fasting and even over-exercise. However, this is a more difficult area for TikTok to tackle because of the nuance involved in making these calls.

The company acknowledges that some of these videos may be fine by themselves, but it needs to examine what sort of “circuit breakers” can be put into place when it sees people becoming trapped in filter bubbles where they’re consuming too much of this sort of content. This builds on news TikTok announced in December, when the company shared how its product and trust and safety teams began collaborating on features to help “pop” users’ filter bubbles by steering them, through recommendations, toward other areas for a more diversified experience.

While this trio of policy updates sounds good on paper, enforcement here is both critical and difficult. TikTok has had guidelines against some of this content, but misogynistic and transphobic content has repeatedly slipped through the cracks. At times, violative content was even promoted by TikTok’s algorithms, according to some tests. TikTok says these moderation failures are something it aims to learn from and improve on.

“At TikTok, we firmly believe that feeling safe is what enables everybody’s creativity to truly thrive and shine. But well-written, nuanced and user-first policies aren’t the finish line. Rather, the strength of any policy lies in enforceability,” said TikTok’s policy director for the U.S. Trust & Safety team, Tara Wadhwa, about the updates. “We apply our policies across all the features that TikTok offers, and in doing so, we absolutely strive to be consistent and equitable in our enforcement,” she said.

At present, content goes through technology that’s been trained to identify potential policy violations, which results in immediate removal if the technology is confident the content is violative. Otherwise, it’s held for human moderation. But this lag time impacts creators, who don’t understand why their content is held for hours (or days!) as decisions are made, or why non-violative content was removed, forcing them to submit appeals. These mistakes — which are often attributed to algorithmic or human errors — can make the creator feel personally targeted by TikTok.
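The flow described above amounts to confidence-based routing. The sketch below is hypothetical: TikTok hasn’t disclosed its models or thresholds, so the `route` function, the 0.95 and 0.5 cutoffs, and the third “publish” band for content with no likely violation are all assumptions. It only illustrates why anything between the two thresholds ends up waiting on a human moderator.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    REMOVE = "remove"        # automated removal: the model is confident it violates policy
    HUMAN_REVIEW = "review"  # held until a human moderator decides
    PUBLISH = "publish"      # assumed band: no likely violation detected


@dataclass
class Classification:
    video_id: str
    # Hypothetical: the model's estimated probability that the video violates policy.
    violation_score: float


def route(c: Classification, remove_at: float = 0.95, review_at: float = 0.5) -> Decision:
    """Route a video by classifier confidence; both thresholds are illustrative assumptions."""
    if not 0.0 <= c.violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if c.violation_score >= remove_at:
        return Decision.REMOVE
    if c.violation_score >= review_at:
        return Decision.HUMAN_REVIEW
    return Decision.PUBLISH


if __name__ == "__main__":
    for vid, score in [("a", 0.99), ("b", 0.70), ("c", 0.10)]:
        print(vid, route(Classification(vid, score)).value)
```

Raising `remove_at` sends more borderline videos to people rather than to automatic removal, trading fewer wrongful takedowns for longer holds, which is the same tension creators feel in the lag described above.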

To address moderation problems, TikTok says it’s invested in specialized moderator training in areas like body positivity, inclusivity, civil rights, counter speech and more. The company claims around 1% of all videos uploaded in the third quarter of last year, or 91 million videos, were removed through moderation policies, many before they ever received views. The company today also employs “thousands” of moderators, including both full-time U.S. employees and contract moderators in Southeast Asia, to provide 24/7 coverage. And it runs post-mortems internally when it makes mistakes, it says.

However, problems with moderation and policy enforcement grow more difficult at scale, as there is simply more content to manage. And TikTok has now grown big enough to be cutting into Facebook’s growth as one of the world’s largest apps. In fact, Meta just reported Facebook saw its first-ever decline in users in the fourth quarter, which it blamed, in part, on TikTok. As more young people turn to TikTok as their preferred social network, it will be pressed not just to say the right things, but to actually get them right.

Source: TikTok updates its policies with focus on minor and LGBTQ safety, age appropriate content and more